A Better Acronym for AI: Automated Inference

Scott Hanselman has a fantastic explanation of how the current Large Language Model-backed A.I. chatbots and assistants work. As well as being a very approachable and engaging introduction, it also explains why the use of ‘please’ and ‘thank you’ in prompts yields a better experience, albeit at greater cost to the planet.

Scott’s presentation confirmed a sneaking suspicion I’ve had for a while: I think we have the I in A.I. wrong.

Our current LLM approach to making intelligent computers doesn’t meet the definition of intelligence:

Intelligence : “the ability to learn, understand, and make judgments or have opinions that are based on reason”

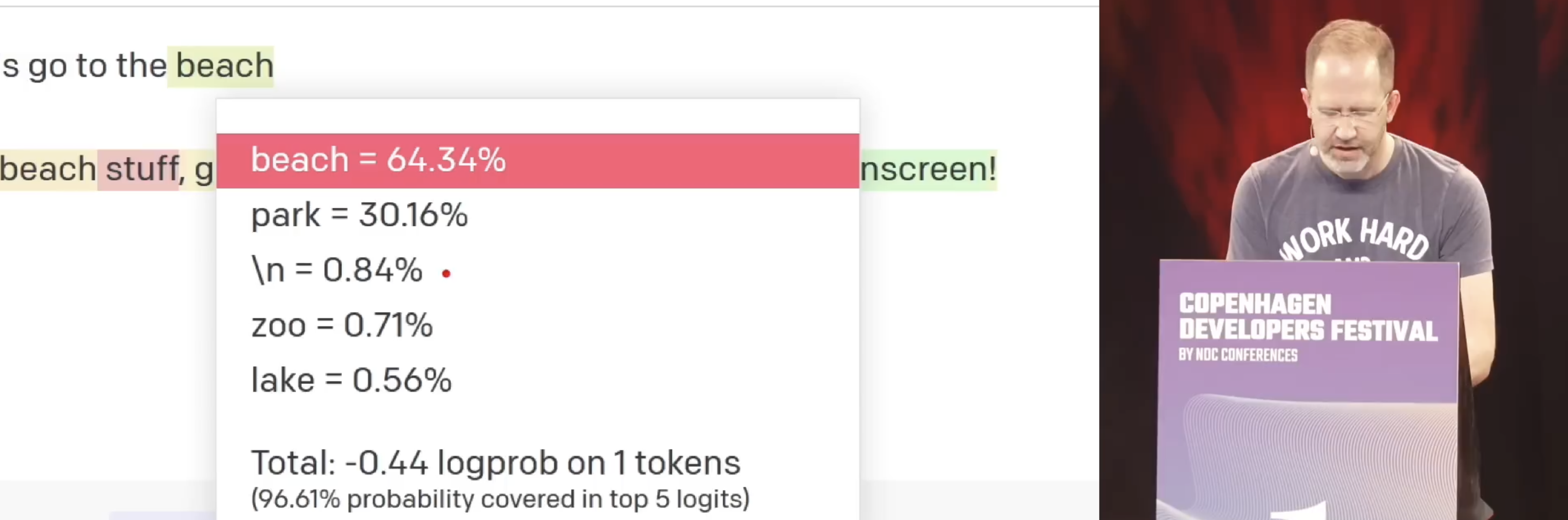

LLMs aren’t based on reason; they produce responses based on probabilities distilled from training data. Weights and randomness are applied to pick the next word in a sentence, the next sentence in a paragraph, and so on. Their output is essentially guesswork.
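To make the "weights and randomness" idea concrete, here is a minimal sketch of weighted guessing for the next word. It is a toy, not how any particular chatbot is implemented: the candidate words, their scores, and the names `softmax`, `pick_next_word` and `toy_scores` are all made up for illustration. Real models work on tokens and compute scores with a neural network.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1.

    A lower temperature sharpens the distribution (less randomness);
    a higher temperature flattens it (more randomness).
    """
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_next_word(candidates, temperature=1.0, rng=random):
    """Make a weighted random guess at the next word."""
    words = list(candidates)
    probs = softmax([candidates[w] for w in words], temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical scores for the word that follows "The cat sat on the".
toy_scores = {"mat": 3.0, "sofa": 2.0, "roof": 1.0, "moon": -1.0}

rng = random.Random(0)  # seeded so repeated runs give the same guesses
print([pick_next_word(toy_scores, temperature=1.0, rng=rng) for _ in range(5)])
```

Likely words come up most often, but unlikely ones can still be picked. Nothing in the loop checks whether the chosen word is true or sensible, which is the sense in which the output is a guess.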

LLMs aren’t intelligent.

I think a better acronym for A.I. is Automated Inference.

Automated : “carried out by machines or computers without needing human control”

Inference : “a guess that you make or an opinion that you form based on the information that you have”

Computer Guessing = Automated Inference = A.I.

I find this definition helpful because thinking about the technology this way helps us use it better. Our expectations are lowered and our scepticism is raised: we’re more critical of the responses and output it produces, and therefore more likely to spot mistakes or bad advice.

All of my writing is licensed under a
